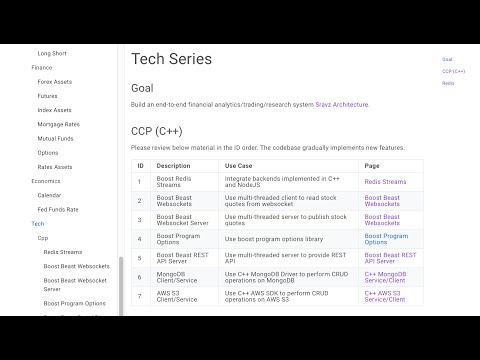

Use Case

Historical Ticker Plant

- Store historical daily and intraday asset quote and trade data

- Use Polars and DuckDB to perform various analytics on the data

Tools Used

- Polars

- DuckDB

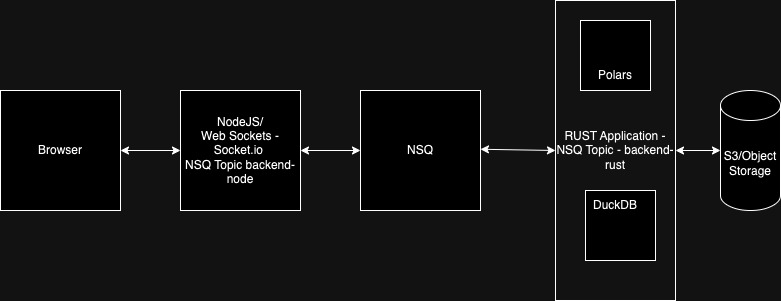

Dataflow Diagram

Session 1

Topics Discussed

- Rust Application Setup

- Interaction between NSQ and Rust Application (Demo)

Video explanation

Source Code

https://github.com/sravzpublic/training/tree/master/training-rust/sravz

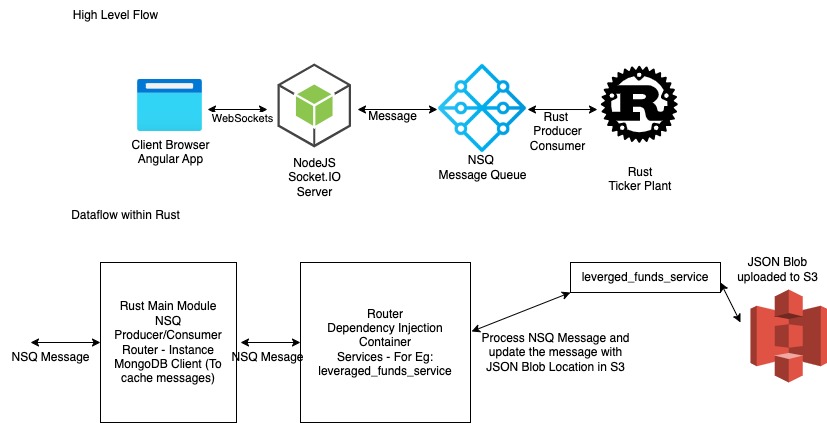

Session 2

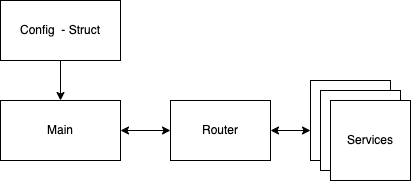

Dataflow Diagram

Topics Discussed

- Ticker plant internal components

- Main Module

- Router

- Services

- Demo of Leveraged Funds service usage
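The main module, router, and services listed above could fit together roughly as follows. This is only a minimal sketch under stated assumptions, not the code from the repository: the `Request` envelope, the `Router` API, the `Handler` signature, and the `leveraged_funds` stand-in are all hypothetical names chosen for illustration, and only the standard library is used.

```rust
use std::collections::HashMap;

/// Hypothetical request envelope (assumption: the real message format may differ).
#[derive(Debug, Clone, PartialEq)]
pub struct Request {
    /// Name of the service the message is addressed to.
    pub service: String,
    /// Service-specific payload, kept as plain text in this sketch.
    pub payload: String,
}

/// A service handler takes the payload and returns a response or an error message.
pub type Handler = fn(&str) -> Result<String, String>;

/// Maps service names to handlers (hypothetical API).
pub struct Router {
    routes: HashMap<String, Handler>,
}

impl Router {
    pub fn new() -> Self {
        Router { routes: HashMap::new() }
    }

    /// Register a handler under a service name.
    pub fn register(&mut self, name: &str, handler: Handler) {
        self.routes.insert(name.to_string(), handler);
    }

    /// Look up the handler for the request and run it.
    pub fn dispatch(&self, req: &Request) -> Result<String, String> {
        match self.routes.get(&req.service) {
            Some(handler) => handler(&req.payload),
            None => Err(format!("unknown service: {}", req.service)),
        }
    }
}

/// Stand-in for a leveraged funds service: it only upper-cases the ticker list.
pub fn leveraged_funds(payload: &str) -> Result<String, String> {
    if payload.is_empty() {
        return Err("no tickers supplied".to_string());
    }
    Ok(format!("leveraged_funds:{}", payload.to_uppercase()))
}
```

In this sketch the main module would build one `Router`, register each service once at startup, and call `dispatch` for every incoming message.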

Video explanation

Source Code

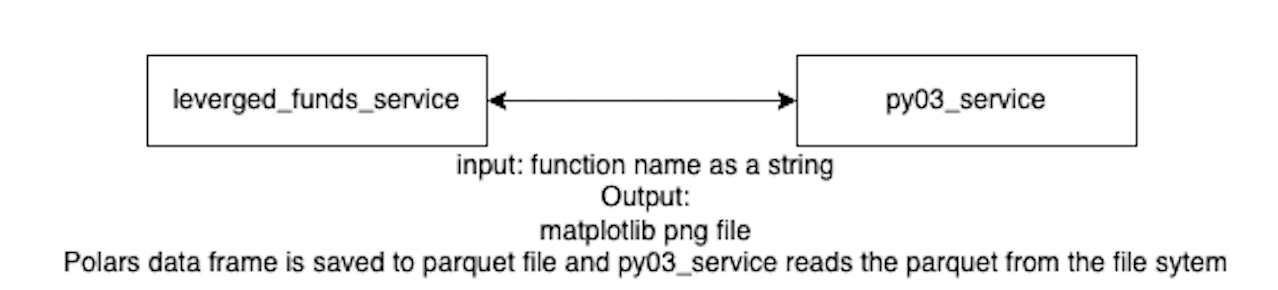

Session 3

Dataflow Diagram

Topics Discussed

- PyO3 integration

- Pass a Polars DataFrame to Python

- Review matplotlib output

Video explanation

Source Code

Session 4

Dataflow Diagram

Topics Discussed

- Config struct to parse environment variables

- Ticker plant sends the output on the NSQ backend-node topic
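A config struct that reads environment variables might look like the sketch below. The variable names (`NSQD_ADDRESS`, `NSQ_CONSUMER_TOPIC`, `NSQ_PRODUCER_TOPIC`), the field names, and the `from_lookup` helper are assumptions made for illustration; only the `backend-rust` and `backend-node` topic names come from the sessions. The lookup is passed in as a closure so the parsing logic can be exercised without touching the real process environment.

```rust
use std::env;

/// Hypothetical configuration for the ticker plant (field names are assumptions).
#[derive(Debug, Clone, PartialEq)]
pub struct Config {
    /// Address of the NSQ daemon, e.g. "127.0.0.1:4150".
    pub nsqd_address: String,
    /// Topic the Rust backend consumes requests from.
    pub consumer_topic: String,
    /// Topic the Rust backend publishes responses to.
    pub producer_topic: String,
}

impl Config {
    /// Build a Config from any key -> value lookup.
    /// The address is required; the topics fall back to defaults.
    pub fn from_lookup<F>(lookup: F) -> Result<Config, String>
    where
        F: Fn(&str) -> Option<String>,
    {
        let nsqd_address = lookup("NSQD_ADDRESS")
            .ok_or_else(|| "NSQD_ADDRESS is not set".to_string())?;
        let consumer_topic = lookup("NSQ_CONSUMER_TOPIC")
            .unwrap_or_else(|| "backend-rust".to_string());
        let producer_topic = lookup("NSQ_PRODUCER_TOPIC")
            .unwrap_or_else(|| "backend-node".to_string());
        Ok(Config { nsqd_address, consumer_topic, producer_topic })
    }

    /// Build a Config from the process environment.
    pub fn from_env() -> Result<Config, String> {
        Config::from_lookup(|key| env::var(key).ok())
    }
}
```

Returning a `Result` instead of panicking on a missing variable lets the caller decide whether to log, retry, or exit.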

Video explanation

Session 5

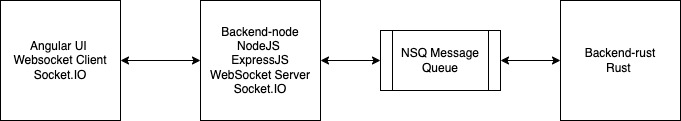

Dataflow Diagram

Topics Discussed

- Demo:

- Angular UI sends a message to the backend-node over socket.io websockets

- Backend-node server receives the message on the socket.io websocket

- Backend-node sends the message to NSQ Server on the backend-rust topic

- Backend-rust receives the message on the NSQ Server backend-rust topic

- Backend-rust processes the message

- Backend-rust sends the response to NSQ Server on the backend-node topic

- Backend-node receives the response message on the backend-node topic

- Backend-node sends the response message to the Angular UI over the socket.io websocket

- Angular UI Debug Console shows the message received from the backend-rust

- Added config.toml support to pass extra config data to backend-rust

- Removed .expect and .unwrap usage, which can cause panics

- Use match to handle the variants of the Result enum

- Error handling using Box<dyn Error>
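The last three points (no `.unwrap`/`.expect`, `match` on `Result`, and `Box<dyn Error>`) can be illustrated with a small standard-library sketch. The `EmptyMessage` error type and the `parse_quantity` and `handle_message` functions are hypothetical and are not taken from the session source code.

```rust
use std::error::Error;
use std::fmt;

/// Hypothetical custom error for an empty message body.
#[derive(Debug)]
pub struct EmptyMessage;

impl fmt::Display for EmptyMessage {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "message body is empty")
    }
}

impl Error for EmptyMessage {}

/// Parse a quantity from a message body.
/// Box<dyn Error> lets one function return different error types.
pub fn parse_quantity(body: &str) -> Result<u32, Box<dyn Error>> {
    let trimmed = body.trim();
    if trimmed.is_empty() {
        return Err(Box::new(EmptyMessage));
    }
    // `?` converts the ParseIntError into Box<dyn Error> instead of panicking.
    let quantity = trimmed.parse::<u32>()?;
    Ok(quantity)
}

/// Handle both Result variants explicitly instead of calling .unwrap().
pub fn handle_message(body: &str) -> String {
    match parse_quantity(body) {
        Ok(quantity) => format!("ok: {}", quantity),
        Err(e) => format!("error: {}", e),
    }
}
```

With this shape a malformed message produces an error response that can be sent back on the response topic, rather than crashing the consumer.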