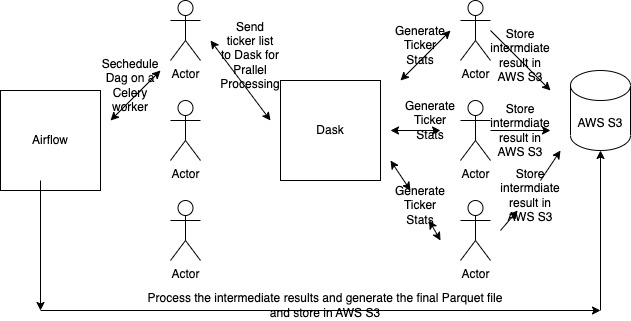

Use Case

Airflow -> Dask -> AWS S3 (Parquet File)

Use this program to generate statistical data for financial symbols. The Airflow DAG submits 5000+ tickers to the Dask cluster. The Dask cluster generates the historical statistics for each ticker and uploads them to AWS S3. Once the Dask cluster futures return data to the Airflow DAG, the DAG consolidates the individual ticker statistics into a single dataframe, uses AWS Data Wrangler to convert the dataframe to a Parquet file, and uploads it to AWS S3. A Boost Beast REST API server can then serve the data in the Parquet file over HTTP using AWS S3 Select.
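The fan-out and consolidation described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual DAG code: `ticker_stats`, `run_pipeline`, the statistics chosen, and the `scheduler_address` and `s3_path` parameters are all hypothetical names, and the per-ticker upload done by the Dask workers is left out for brevity.

```python
import statistics


def ticker_stats(ticker, closes):
    """Summarise one ticker's closing prices as a flat dict (one result row)."""
    return {
        "ticker": ticker,
        "count": len(closes),
        "mean": statistics.fmean(closes),
        "min": min(closes),
        "max": max(closes),
    }


def run_pipeline(prices_by_ticker, scheduler_address, s3_path):
    """Fan out to Dask, consolidate the results, and write one Parquet file.

    Assumption: prices_by_ticker maps a ticker symbol to a list of closes.
    """
    # Imported lazily so ticker_stats can be used without these packages.
    import awswrangler as wr
    import pandas as pd
    from dask.distributed import Client

    client = Client(scheduler_address)
    try:
        # One future per ticker; each runs ticker_stats on a Dask worker.
        futures = [
            client.submit(ticker_stats, ticker, closes)
            for ticker, closes in prices_by_ticker.items()
        ]
        # Block until every future has returned its row.
        rows = client.gather(futures)
    finally:
        client.close()

    # Consolidate the per-ticker rows into a single dataframe.
    frame = pd.DataFrame(rows)
    # AWS Data Wrangler serialises the dataframe to Parquet and uploads it.
    wr.s3.to_parquet(df=frame, path=s3_path)
    return frame
```

In an Airflow DAG, a function like `run_pipeline` would typically be the callable of a single Python task, so the scheduler only sees one task while Dask handles the per-ticker parallelism.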

Libraries/Tools Used

- Airflow

- Dask

- AWS Data Wrangler

Dataflow Diagram

Output File

Session 1

- Discuss environment setup

- Airflow DAG and integration with the Dask cluster

- Review the parquet file in AWS S3
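For the review step, one option is to query the Parquet file in place with S3 Select through boto3, which is the same mechanism the use case mentions for serving the data. The sketch below is only an assumption about how that could look: the `ticker` column name and the `bucket` and `key` parameters are hypothetical, and the real file layout may differ.

```python
def build_select_request(bucket, key, ticker):
    """Build keyword arguments for boto3's select_object_content call."""
    # Double any single quotes so the ticker is a safe SQL string literal.
    safe_ticker = ticker.replace("'", "''")
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        # Assumption: the Parquet file has a column named "ticker".
        "Expression": f"SELECT * FROM S3Object s WHERE s.ticker = '{safe_ticker}'",
        "InputSerialization": {"Parquet": {}},
        "OutputSerialization": {"JSON": {}},
    }


def select_ticker_rows(bucket, key, ticker):
    """Run the query and return the matching rows as newline-delimited JSON."""
    # Imported lazily so the request builder works without boto3 installed.
    import boto3

    s3 = boto3.client("s3")
    response = s3.select_object_content(**build_select_request(bucket, key, ticker))
    chunks = []
    # The response payload is an event stream; only Records events carry data.
    for event in response["Payload"]:
        if "Records" in event:
            chunks.append(event["Records"]["Payload"])
    return b"".join(chunks).decode("utf-8")
```

Because the filter runs inside S3, only the matching rows travel over the network, which is useful when the consolidated file covers thousands of tickers.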