Use Case

Airflow -> AWS S3 (JSON/Parquet File)

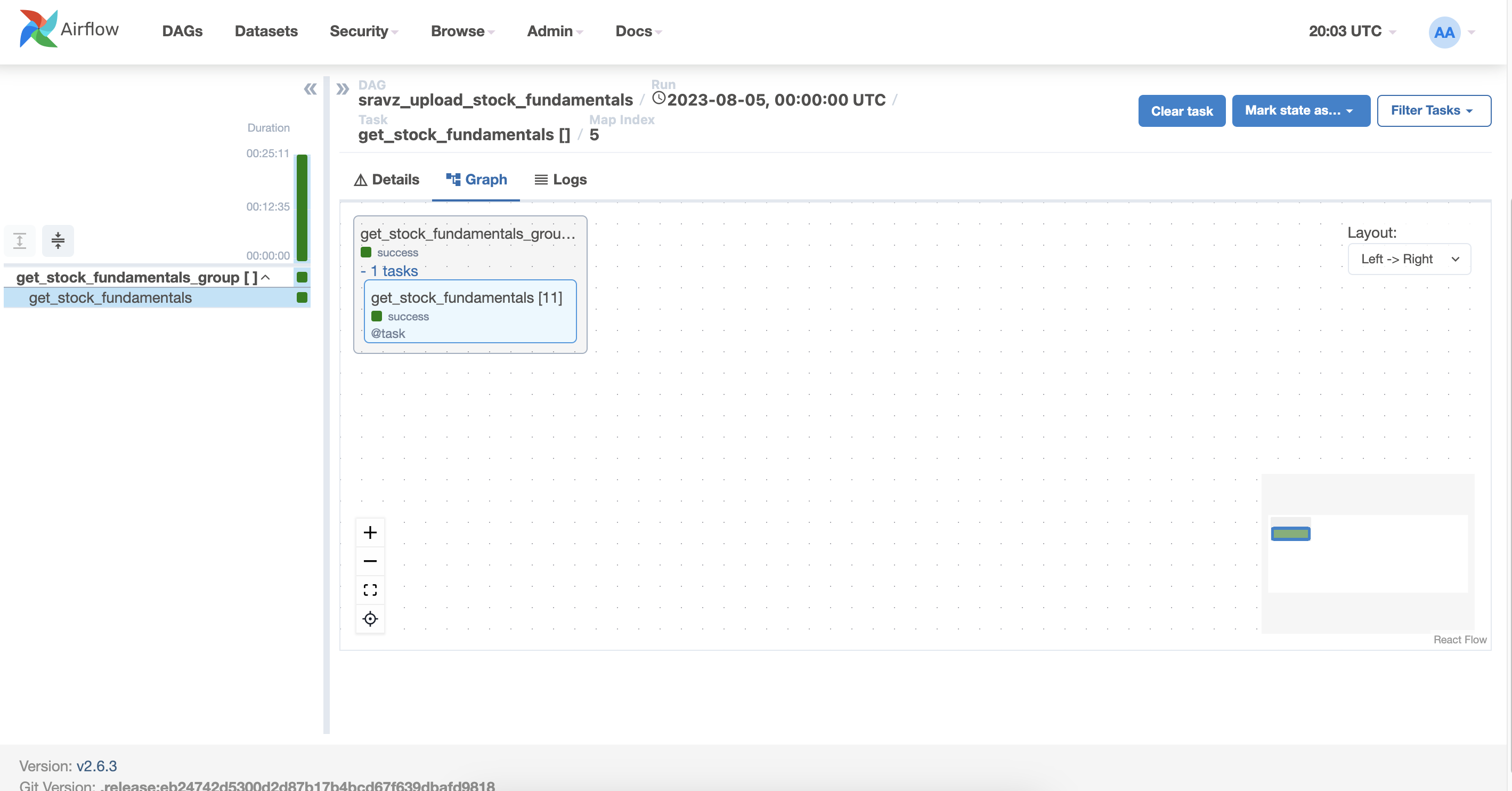

Use this program to upload stock fundamentals data and stock quote statistics to AWS S3 as JSON or Parquet files. The Airflow DAG distributes 5000+ tickers across Airflow Celery workers using task mapping and task group mapping. Each mapped task or task group performs an HTTP GET against the service provider and uploads the returned data to AWS S3.
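The flow described above can be sketched roughly as follows. This is a minimal illustration, not the program's actual code: the bucket name, API URL, query parameter, batch size, and function names are all hypothetical, and it assumes Airflow 2.4 or later with `requests`, `pandas`, and `awswrangler` installed on the workers. Airflow limits how many instances a single mapped task may expand to (the `max_map_length` setting, 1024 by default), so this sketch groups tickers into batches; whether the original program batches tickers or raises that limit is not stated.

```python
from datetime import datetime

# Hypothetical names, not taken from the original program.
BUCKET = "my-stock-bucket"
API_URL = "https://api.example.com/fundamentals"
BATCH_SIZE = 50


def batch_tickers(tickers: list[str], size: int) -> list[list[str]]:
    """Split the ticker list into fixed-size batches, one per mapped task."""
    return [tickers[i:i + size] for i in range(0, len(tickers), size)]


def s3_path(bucket: str, dataset: str, ticker: str) -> str:
    """Build the S3 object path for one ticker's Parquet file."""
    return f"s3://{bucket}/{dataset}/{ticker}.parquet"


def build_dag():
    """Create the DAG. Third-party imports live here so the helpers above
    can be exercised without Airflow installed."""
    import awswrangler as wr
    import pandas as pd
    import requests
    from airflow.decorators import dag, task

    @dag(schedule=None, start_date=datetime(2024, 1, 1), catchup=False)
    def stock_data_to_s3():
        @task
        def list_batches() -> list[list[str]]:
            # Placeholder for the real list of 5000+ tickers.
            tickers = ["AAPL", "MSFT", "GOOG"]
            return batch_tickers(tickers, BATCH_SIZE)

        @task
        def fetch_and_upload(batch: list[str]) -> list[str]:
            written = []
            for ticker in batch:
                # HTTP GET from the (hypothetical) service provider endpoint.
                resp = requests.get(API_URL, params={"symbol": ticker}, timeout=30)
                resp.raise_for_status()
                df = pd.json_normalize(resp.json())
                path = s3_path(BUCKET, "fundamentals", ticker)
                # AWS Data Wrangler writes the DataFrame to S3 as Parquet.
                wr.s3.to_parquet(df=df, path=path)
                written.append(path)
            return written

        # One mapped task instance per batch; Celery workers pick them up.
        fetch_and_upload.expand(batch=list_batches())

    return stock_data_to_s3()
```

In a DAG file, the result would be exposed at module level, for example `stock_dag = build_dag()`, so the scheduler can discover it. The same `.expand()` call is also available on functions decorated with `@task_group` in Airflow 2.5 and later, which is one way to map a group of tasks per batch instead of a single task.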

Libraries/Tools Used

- Airflow

- AWS Data Wrangler

Dataflow Diagram

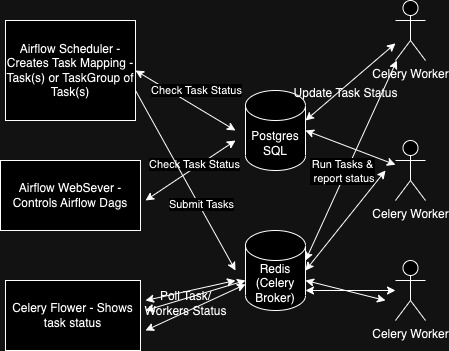

Airflow Architecture

Source: Airflow Documentation

Task Mapping Flow

Task Group

Output File

Session 1

- Discuss environment setup

- Airflow Task and Task Group

- High-level discussion of Dask vs. Airflow Task Mapping