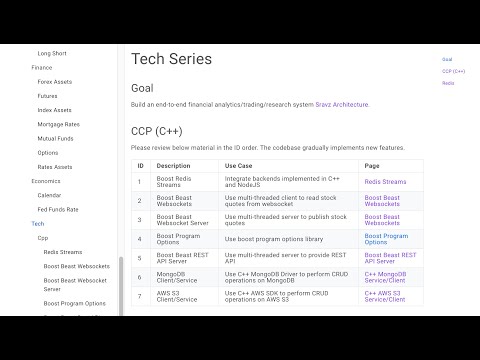

Use Case

Airflow -> AWS S3 (JSON/Parquet File)

Use this program to upload mutual fund fundamentals data to AWS S3.
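Here is a minimal sketch of what one uploaded object might look like. It assumes one JSON file per ticker under the `mutual_funds/fundamentals/` prefix that appears in the bucket listing later in this post. The helper names `build_object_key` and `serialize_fundamentals`, the `<TICKER>.json` file name, and the sample record fields are hypothetical. The Parquet variant is not shown because it needs a third-party library such as pyarrow.

```python
import json
from datetime import date

# Prefix taken from the bucket listing in this post; the per-ticker
# "<TICKER>.json" file name is an assumption, not the author's scheme.
PREFIX = "mutual_funds/fundamentals/"


def build_object_key(ticker: str, prefix: str = PREFIX) -> str:
    """Return the S3 object key for one ticker's fundamentals file."""
    # Normalize whitespace and casing so keys are stable across inputs.
    return f"{prefix}{ticker.strip().upper()}.json"


def serialize_fundamentals(record: dict) -> bytes:
    """Encode a fundamentals record as UTF-8 JSON bytes ready for upload."""
    # sort_keys gives deterministic output; default=str turns values such as
    # dates into strings instead of raising TypeError.
    return json.dumps(record, sort_keys=True, default=str).encode("utf-8")


if __name__ == "__main__":
    print(build_object_key(" vfiax "))
    # mutual_funds/fundamentals/VFIAX.json
    print(serialize_fundamentals({"ticker": "VFIAX", "as_of": date(2024, 1, 31)}))
    # b'{"as_of": "2024-01-31", "ticker": "VFIAX"}'
```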

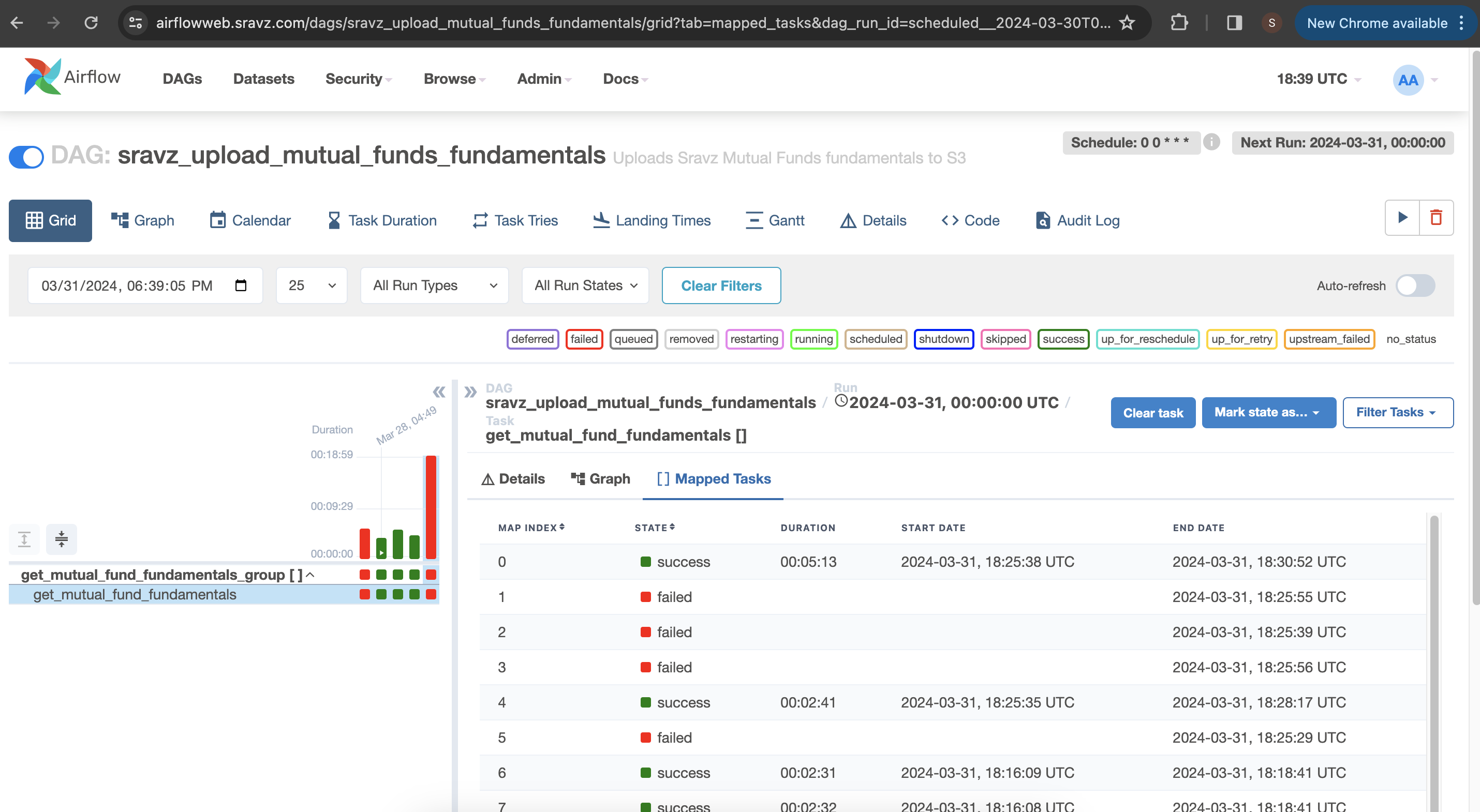

The Airflow DAG submits tickers to Airflow Celery workers using dynamic task and task group mapping.
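Mapping one task per ticker can run into Airflow's `[core] max_map_length` setting. This setting caps the length of the list that drives a mapped task and defaults to 1024, while the listing further down shows 2544 objects. One way to stay under that cap is to split the tickers into batches and map a task group over the batches. This is sketched here as an assumption, not as the author's code. `batch_tickers` and the batch size are hypothetical. In a DAG, the returned list would be passed to a mapped task group through `.expand(...)`.

```python
from typing import List, Sequence

# Assumption: mirrors Airflow's [core] max_map_length default (1024), the
# maximum length of a list that can drive task or task group mapping.
MAX_MAP_LENGTH = 1024


def batch_tickers(tickers: Sequence[str], batch_size: int) -> List[List[str]]:
    """Split tickers into consecutive batches; each batch feeds one mapped instance."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batches = [list(tickers[i:i + batch_size])
               for i in range(0, len(tickers), batch_size)]
    # Fail with a clear message here rather than letting Airflow fail the
    # upstream task at run time.
    if len(batches) > MAX_MAP_LENGTH:
        raise ValueError(
            f"{len(batches)} batches exceed max_map_length={MAX_MAP_LENGTH}; "
            "increase batch_size")
    return batches


if __name__ == "__main__":
    tickers = [f"FUND{i:04d}" for i in range(2544)]  # placeholder ticker names
    batches = batch_tickers(tickers, batch_size=100)
    print(len(batches), len(batches[-1]))  # 26 44
```

With a batch size of 100, 2544 tickers become 26 mapped task group instances. Larger batches mean fewer scheduler entries, but each task runs longer and retries are coarser.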

Each mapped task group/task performs an HTTP GET against the data provider and uploads the response to AWS S3.
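The per-ticker work can be sketched as a plain function that takes the fetch and upload steps as parameters. Keeping those steps injectable makes the function testable without network access. This is an assumption about the structure, not the author's uploader. In a real task, `fetch` might wrap an HTTP GET against the provider's API (for example with `urllib.request.urlopen`). `upload` might call boto3's `put_object(Bucket=..., Key=..., Body=...)` on a client created with `endpoint_url` pointing at the S3-compatible endpoint. `process_batch`, its status strings, and the key layout are hypothetical.

```python
import json
from typing import Callable, Dict, List

# Hypothetical callable types: fetch(ticker) returns the provider's fundamentals
# as a dict; upload(key, body) stores the bytes (e.g. an S3 put_object wrapper).
Fetch = Callable[[str], dict]
Upload = Callable[[str, bytes], None]


def process_batch(tickers: List[str], fetch: Fetch, upload: Upload,
                  prefix: str = "mutual_funds/fundamentals/") -> Dict[str, str]:
    """Fetch and upload each ticker, returning a status per ticker."""
    status: Dict[str, str] = {}
    for ticker in tickers:
        try:
            record = fetch(ticker)
            body = json.dumps(record, sort_keys=True).encode("utf-8")
            upload(f"{prefix}{ticker}.json", body)
            status[ticker] = "ok"
        except Exception as exc:  # isolate failures so one ticker can't sink the batch
            status[ticker] = f"error: {exc}"
    return status


if __name__ == "__main__":
    store: Dict[str, bytes] = {}  # in-memory stand-in for the S3 bucket

    def fake_fetch(ticker: str) -> dict:
        if ticker == "BAD":
            raise RuntimeError("HTTP 404")
        return {"ticker": ticker}

    print(process_batch(["AAA", "BAD"], fake_fetch, store.__setitem__))
    # {'AAA': 'ok', 'BAD': 'error: HTTP 404'}
    print(store)
    # {'mutual_funds/fundamentals/AAA.json': b'{"ticker": "AAA"}'}
```

Recording per-ticker errors means one bad ticker does not discard the rest of the batch. The trade-off is that Airflow's task-level `retries` will not re-run the failed tickers unless the task raises after the loop.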

Libraries/Tools Used

- Airflow

Dataflow Diagram

Airflow Architecture

Source: Airflow Documentation


Task Mapping Flow

Output Files

```
ubuntu@vmi1281458:~/workspace/sravz_repo$ aws --profile contabo --endpoint-url https://XXX.contabostorage.com s3 ls s3://XXX/mutual_funds/fundamentals/ | wc -l
2544
```
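`wc -l` confirms how many entries exist, but it cannot tell you which tickers are missing. Here is a minimal sketch of that check. It assumes one `<TICKER>.json` object per ticker under the fundamentals prefix, and a list of full object keys (for example, gathered from boto3's `list_objects_v2` paginator). `missing_tickers` is a hypothetical helper.

```python
from typing import Iterable, List


def missing_tickers(expected: Iterable[str], listed_keys: Iterable[str],
                    prefix: str = "mutual_funds/fundamentals/",
                    suffix: str = ".json") -> List[str]:
    """Return the sorted tickers that have no object at <prefix><TICKER><suffix>."""
    present = set()
    for key in listed_keys:
        # Ignore keys outside the fundamentals prefix or with another extension.
        if key.startswith(prefix) and key.endswith(suffix):
            present.add(key[len(prefix):len(key) - len(suffix)])
    return sorted(set(expected) - present)


if __name__ == "__main__":
    keys = ["mutual_funds/fundamentals/AAA.json",
            "mutual_funds/fundamentals/CCC.json"]
    print(missing_tickers(["AAA", "BBB", "CCC"], keys))  # ['BBB']
```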

Session 1

- Discuss environment setup

- Airflow tasks and task groups

- Discuss the DAG

- Discuss the fundamentals uploader code